Posted: March 12th, 2010 | Author: chmullig | Filed under: Nerdery | Tags: data, picloud, python | 2 Comments »

I had to crunch some data today, and decided to experiment a bit. It mostly involved lots and lots of Levenshtein ratios. On my laptop it took over 25 minutes to complete a single run (45k rows, several thousand calculations per row) – a bummer when you want to quickly iterate the rules, cutoffs, penalties, etc. First step was simply cutting out some work that was a nice-to-have. That got me down to 16 minutes.

Second was adding multiprocessing. I figured this would be easy, but the way I originally wrote the code (the function required both an element, and a penalty matrix) meant that just plain multiprocessing.Pool.map() wasn’t working. I wrapped it up with itertools.izip(iterator, itertools.repeat(matrix)), but that gives a tuple which you can’t easily export. It turns out that this, basically calculatorstar from the Pool example, is a godsend:

def wrapper(args):

return func(*args)

So that got me down to 8 minutes on my laptop. However the cool part is that on an 8 core server I was down to only 1 minute, 30 seconds. Those are the sorts of iteration times I can deal with.

Then I decided to try the PiCloud. After trying it out earlier this week I thought it would be interesting to test it on a real problem that linearly scales with more cores. They advertise it in the docs, so I figured maybe it would be useful and even faster. Not so fast. It was easy to write after I already had multiprocessing working, but the first version literally crashed my laptop. I later figured out that the “naive” way to write it made it suck up all the RAM on the system. After less than 5 minutes I killed it with 1.7GB/2GB consumed. Running it on the aforementioned 8 core/32GB server had it consume 5GB before it finally crashed with a HTTP 500 error. I posted in the forums, got some advice, but still can’t get it working. (Read that short thread for the rest of the story). This seems like exactly what they should be nailing, but so far they’re coming up empty.

2 Comments »

Posted: March 9th, 2010 | Author: chmullig | Filed under: Nerdery | Tags: distributed, picloud, pyro, python | 1 Comment »

A couple weeks ago my coworker mentioned PiCloud. It claims to be “Cloud Computing. Simplified.” for python programming. Indeed, their trivial examples are too good to be true, basically. I pointed out how the way it was packaging up code to send over the wire was a lot like Pyro‘s Mobile Code feature. We actually use Pyro mobile code quite a bit at work, within the context of our own distributed system running across machines we maintain.

After getting beta access I decided to check it out today. I spent about 15 minutes playing around with it, and decided to do a short writeup because there’s so little info out there. The short version is that technically it’s quite impressive. Simple, but more complicated than square(x) cases are as easy as they say. Information about PiCloud is in pretty short supply, so here’s my playing around reproduced for all to see.

Installing/first using

This is pretty easy. I’m using a virtualenv because I was skeptical, but it’s neat how easy it is even with that. So I’m going to setup a virtualenv, install ipython to the virtualenv, then install the cloud egg. At the end I’ll add my api key to the ~/.picloud/cloudconf.py file so I don’t need to type it repeatedly. The file is created when you first import cloud, and is very straightforward.

chmullig@gore:~$ virtualenv picloud

New python executable in picloud/bin/python

Installing setuptools............done.

chmullig@gore:~$ source picloud/bin/activate

(picloud)chmullig@gore:~$ easy_install -U ipython

Searching for ipython

#snip

Processing ipython-0.10-py2.6.egg

creating /home/chmullig/picloud/lib/python2.6/site-packages/ipython-0.10-py2.6.egg

Extracting ipython-0.10-py2.6.egg to /home/chmullig/picloud/lib/python2.6/site-packages

Adding ipython 0.10 to easy-install.pth file

Installing iptest script to /home/chmullig/picloud/bin

Installing ipythonx script to /home/chmullig/picloud/bin

Installing ipcluster script to /home/chmullig/picloud/bin

Installing ipython script to /home/chmullig/picloud/bin

Installing pycolor script to /home/chmullig/picloud/bin

Installing ipcontroller script to /home/chmullig/picloud/bin

Installing ipengine script to /home/chmullig/picloud/bin

Installed /home/chmullig/picloud/lib/python2.6/site-packages/ipython-0.10-py2.6.egg

Processing dependencies for ipython

Finished processing dependencies for ipython

(picloud)chmullig@gore:~$ easy_install http://server/cloud-1.8.2-py2.6.egg

Downloading http://server/cloud-1.8.2-py2.6.egg

Processing cloud-1.8.2-py2.6.egg

creating /home/chmullig/picloud/lib/python2.6/site-packages/cloud-1.8.2-py2.6.egg

Extracting cloud-1.8.2-py2.6.egg to /home/chmullig/picloud/lib/python2.6/site-packages

Adding cloud 1.8.2 to easy-install.pth file

Installed /home/chmullig/picloud/lib/python2.6/site-packages/cloud-1.8.2-py2.6.egg

Processing dependencies for cloud==1.8.2

Finished processing dependencies for cloud==1.8.2

(picloud)chmullig@gore:~$ python -c 'import cloud' #to create the ~/.picloud directory

(picloud)chmullig@gore:~$ vim .picloud/cloudconf.py #to add api_key and api_secretkey

Trivial Examples

This is their trivial example, just to prove it’s as easy for me as it was for them.

In [1]: def square(x):

...: return x**2

...:

In [2]: import cloud

In [3]: cid = cloud.call(square, 10)

In [4]: cloud.result(cid)

Out[4]: 100

BAM! That’s just stupidly easy. Let’s try a module or two.

In [5]: import random

In [6]: def shuffler(x):

...: xl = list(x)

...: random.shuffle(xl)

...: return ''.join(xl)

...:

In [8]: cid = cloud.call(shuffler, 'Welcome to chmullig.com')

In [9]: cloud.result(cid)

Out[9]: ' etcmmhmoeWll.cgcl uioo'

Less-Trivial Example & Packages

So that’s neat, but what about something I wrote, or something that’s off pypi that they don’t already have installed? Also quite easy. I’m going to be using Levenshtein edit distance for this, because it’s simple but non-standard. For our purposes we’ll begin with a pure python implementation, borrowed from Magnus Lie. Then we’ll switch to a C extension version, originally written by David Necas (Yeti), which I’ve rehosted on Google Code.

(picloud)chmullig@gore:~$ wget -O hetlev.py http://hetland.org/coding/python/levenshtein.py

#snip

2010-03-09 12:13:04 (79.2 KB/s) - `hetlev.py' saved [707/707]

(picloud)chmullig@gore:~$ easy_install http://pylevenshtein.googlecode.com/files/python-Levenshtein-0.10.1.tar.bz2

Downloading http://pylevenshtein.googlecode.com/files/python-Levenshtein-0.10.1.tar.bz2

Processing python-Levenshtein-0.10.1.tar.bz2

Running python-Levenshtein-0.10.1/setup.py -q bdist_egg --dist-dir /tmp/easy_install-mqtK2d/python-Levenshtein-0.10.1/egg-dist-tmp-igxMyM

zip_safe flag not set; analyzing archive contents...

Adding python-Levenshtein 0.10.1 to easy-install.pth file

Installed /home/chmullig/picloud/lib/python2.6/site-packages/python_Levenshtein-0.10.1-py2.6-linux-x86_64.egg

Processing dependencies for python-Levenshtein==0.10.1

Finished processing dependencies for python-Levenshtein==0.10.1

(picloud)chmullig@gore:~$

Now both are installed locally and built. Beautiful. Let’s go ahead and test out the hetlev version.

In [18]: def distances(word, comparisonWords):

....: results = []

....: for otherWord in comparisonWords:

....: results.append(hetlev.levenshtein(word, otherWord))

....: return results

In [24]: zip(words, distances(word, words))

Out[24]:

[('kitten', 0),

('sitten', 1),

('sittin', 2),

('sitting', 3),

('cat', 5),

('kitty', 2),

('smitten', 2)]

Now let’s put that up on PiCloud! It’s, uh, trivial. And fast.

In [25]: cid = cloud.call(distances, word, words)

In [26]: zip(words, cloud.result(cid))

Out[26]:

[('kitten', 0),

('sitten', 1),

('sittin', 2),

('sitting', 3),

('cat', 5),

('kitty', 2),

('smitten', 2)]

Now let’s switch it to use the C extension version of edit distance from the PyLevenshtein package, and try to use it with PiCloud.

In [32]: import Levenshtein

In [33]: def cdistances(word, comparisonWords):

results = []

for otherword in comparisonWords:

results.append(Levenshtein.distance(word, otherword))

return results

....:

In [38]: zip(words, cdistances(word, words))

Out[38]:

[('kitten', 0),

('sitten', 1),

('sittin', 2),

('sitting', 3),

('cat', 5),

('kitty', 2),

('smitten', 2)]

In [39]: cid = cloud.call(cdistances, word, words)

In [40]: cloud.result(cid)

ERROR: An unexpected error occurred while tokenizing input

The following traceback may be corrupted or invalid

The error message is: ('EOF in multi-line statement', (30, 0))

ERROR: An unexpected error occurred while tokenizing input

The following traceback may be corrupted or invalid

The error message is: ('EOF in multi-line statement', (37, 0))

---------------------------------------------------------------------------

CloudException Traceback (most recent call last)

CloudException: Job 14:

Could not depickle job

Traceback (most recent call last):

File "/root/.local/lib/python2.6/site-packages/cloudserver/workers/employee/child.py", line 202, in run

File "/usr/local/lib/python2.6/dist-packages/cloud/serialization/cloudpickle.py", line 501, in subimport

__import__(name)

ImportError: ('No module named Levenshtein', <function subimport at 0x2290ed8>, ('Levenshtein',))

Installing C-Extension via web

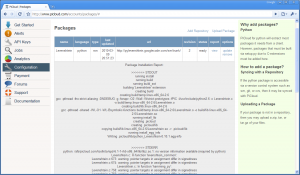

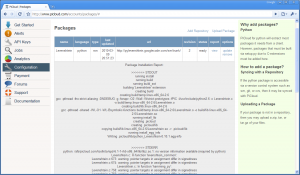

Not too surprisingly that didn’t work – Levenshtein is a C extension I built on my local machine. PiCloud doesn’t really make it obvious, but you can add C-Extensions via their web interface. Amazingly you can point it to an SVN repo and it will let you refresh it. It seems to download and call setup.py install, but it’s a little unclear. The fact is it just worked, so I didn’t care. I clicked on “Add Repository” and pasted in the URL from google code, http://pylevenshtein.googlecode.com/svn/trunk. It built it and installed it, you can see the output on the right. I then just reran the exact same command and it works.

Not too surprisingly that didn’t work – Levenshtein is a C extension I built on my local machine. PiCloud doesn’t really make it obvious, but you can add C-Extensions via their web interface. Amazingly you can point it to an SVN repo and it will let you refresh it. It seems to download and call setup.py install, but it’s a little unclear. The fact is it just worked, so I didn’t care. I clicked on “Add Repository” and pasted in the URL from google code, http://pylevenshtein.googlecode.com/svn/trunk. It built it and installed it, you can see the output on the right. I then just reran the exact same command and it works.

In [41]: cid = cloud.call(cdistances, word, words)

In [42]: cloud.result(cid)

Out[42]: [0, 1, 2, 3, 5, 2, 2]

In [43]: zip(words, cloud.result(cid))

Out[43]:

[('kitten', 0),

('sitten', 1),

('sittin', 2),

('sitting', 3),

('cat', 5),

('kitty', 2),

('smitten', 2)]

Slightly more complicated

I’ve written a slightly more complicated script that fetches the qwantzle corpus and uses jaro distance to find the n closest words in the corpus to a given word. It’s pretty trivial and dumb, but definitely more complicated than the above examples. Below is closestwords.py

import Levenshtein

import urllib

class Corpusinator(object):

'''

Finds the closest words to the word you specified.

'''

def __init__(self, corpus='http://cs.brown.edu/~jadrian/docs/etc/qwantzcorpus'):

'''Setup the corpus for later use. By default it uses

http://cs.brown.edu/~jadrian/docs/etc/qwantzcorpus, but can by overridden

by specifying an alternate URL that has one word per line. A number, a space, then the word.

'''

raw = urllib.urlopen('http://cs.brown.edu/~jadrian/docs/etc/qwantzcorpus').readlines()

self.corpus = set()

for line in raw:

try:

self.corpus.add(line.split()[1])

except IndexError:

pass

def findClosestWords(self, words, n=10):

'''

Return the n (default 10) closest words from the corpus.

'''

results = {}

for word in words:

tempresults = []

for refword in self.corpus:

dist = Levenshtein.jaro(word, refword)

tempresults.append((dist, refword))

tempresults = sorted(tempresults, reverse=True)

results[word] = tempresults[:n]

return results |

Very simple. Let’s try ‘er out. First locally, then over the cloud.

In [1]: import closestwords

In [2]: c = closestwords.Corpusinator()

In [3]: c.findClosestWords(['bagel', 'cheese'], 5)

Out[3]:

{'bagel': [(0.8666666666666667, 'barge'),

(0.8666666666666667, 'bag'),

(0.8666666666666667, 'badge'),

(0.8666666666666667, 'angel'),

(0.8666666666666667, 'age')],

'cheese': [(1.0, 'cheese'),

(0.95238095238095244, 'cheesed'),

(0.88888888888888895, 'cheers'),

(0.88888888888888895, 'cheeks'),

(0.8666666666666667, 'cheeseball')]}

Unfortunately it just doesn’t want to work happily with PiCloud & ipython when you’re running import closestwords. The most obvious won’t work, cloud.call(c.findClosestWords, [‘bagel’]). Neither will creating a tiny wrapper function and calling that within ipython:

def caller(words, n=10):

c = closestwords.Corpusinator()

return c.findClosestWords(words, n)

cloud.call(caller, ['bagel']) |

I created a stupidly simple wrapper python file, wrap.py:

import closestwords

import cloud

cid = cloud.call(closestwords.caller, ['bagel',])

print cloud.result(cid) |

That gives an import error. Even putting that caller wrapper above at the bottom of the closestwords.py and calling it in the __main__ section (as I do below with c.findClosestWords) didn’t work.

However if I stick it directly in closestwords.py, initialize the instance, then run it from there, everything is fine. I’m not sure what this means, if it’s supposed to happen, or what. But it seems like it could be a pain in the butt just to get it calling the right function in the right context.

if __name__ == '__main__':

import cloud

c = Corpusinator()

cid = cloud.call(c.findClosestWords, ['bagel',])

print cloud.result(cid) |

What passes for a conclusion

I had a good time playing with PiCloud. I’m going to look at adapting real code to use it. If I get carried away AND feel like blogging I’ll be sure to post ‘er up. They have pretty good first tier support for the map part of map/reduce, which would be useful. Two links I found useful when working with PiCloud:

Update 3/11

Aaron Staley of PiCloud wrote me a nice email about this post. He says my problem with the closestwords example was due to a server side bug they’ve fixed. In playing around, it does seem a bit better. A few ways I tried to call it failed, but many of them worked. I had trouble passing in closestwords.caller, either in ipython or the wrapper script. However re-defining caller in ipython worked, as did creating an instance and passing in the instance’s findClosestWords function. A+ for communication, guys.

In [3]: cid = cloud.call(closestwords.caller, ['bagel'])

In [4]: cloud.result(cid)

ERROR: An unexpected error occurred while tokenizing input

The following traceback may be corrupted or invalid

The error message is: ('EOF in multi-line statement', (30, 0))

ERROR: An unexpected error occurred while tokenizing input

The following traceback may be corrupted or invalid

The error message is: ('EOF in multi-line statement', (37, 0))

---------------------------------------------------------------------------CloudException: Job 36: Could not depickle job

Traceback (most recent call last):

File "/root/.local/lib/python2.6/site-packages/cloudserver/workers/employee/child.py", line 202, in run

AttributeError: 'module' object has no attribute 'caller'

In [8]: c = closestwords.Corpusinator()

In [9]: cid = cloud.call(c.findClosestWords, ['bagel', 'cheese'], 5)

In [10]: cloud.result(cid)

Out[10]:

{'bagel': [(0.8666666666666667, 'barge'),

(0.8666666666666667, 'bag'),

(0.8666666666666667, 'badge'),

(0.8666666666666667, 'angel'),

(0.8666666666666667, 'age')],

'cheese': [(1.0, 'cheese'),

(0.95238095238095244, 'cheesed'),

(0.88888888888888895, 'cheers'),

(0.88888888888888895, 'cheeks'),

(0.8666666666666667, 'cheeseball')]}

In [11]: def caller(words, n=10):

....: c = closestwords.Corpusinator()

....: return c.findClosestWords(words, n)

....:

In [12]: cid = cloud.call(caller, ['bagel'])

In [13]: reload(closestword)

KeyboardInterrupt

In [13]: cloud.result(cid)

Out[13]:

{'bagel': [(0.8666666666666667, 'barge'),

(0.8666666666666667, 'bag'),

(0.8666666666666667, 'badge'),

(0.8666666666666667, 'angel'),

(0.8666666666666667, 'age'),

(0.8222222222222223, 'barrel'),

(0.8222222222222223, 'barely'),

(0.81111111111111123, 'gamble'),

(0.79047619047619044, 'vaguely'),

(0.79047619047619044, 'largely')]}

Update 3/12

I did some more experimentation with PiCloud, posted separately.

1 Comment »

Posted: March 3rd, 2010 | Author: chmullig | Filed under: personal | Tags: cooking | No Comments »

These are some deliciously tasty scones that are incredibly easy to make. The recipe is from a former cowoker, Ryan. They just take a few minutes and aren’t messy or anything. We often substitute out up to half the flour with whole wheat. Presumably healthier and gives a nice flavor. It can fairly easily scale up or down (we usually make a half recipe for 2 people).

Scones, makes about a dozen.

Dry Ingredients:

- 2 cups flour (up to half whole wheat, if you want)

- 1/3 cup white sugar

- 1 tablespoon baking powder

- 1/2 teaspoon salt

Add 1 & 1/4 cups cream.

Mix with a fork until it forms a loose mass. Mold it into shape by hand, but be careful not to overmix.

Bake at 425 F until lightly brown on top and it passes the toothpick test. In a proper oven this is usually around 15 min. In a toaster oven it might be more like 20.

No Comments »

Posted: March 2nd, 2010 | Author: chmullig | Filed under: personal | Tags: BNC, fishboy, high school | 3 Comments »

Many years ago (2004) I was at Montgomery Blair HS, and was involved in the student TV/Radio station BNC. One of the project we did was this radio show, Fishboy. I was only minimally involved helping with some technical parts, but the guys who were involved did an awesome job. After Ben saw this article I remembered I still had these clips and had to post them here. Hopefully you can hear, and if not leave a comment/email.

Fishboy Ep 1

Fishboy Ep 2

Fishboy Ep 3

Fishboy Ep 4

Fishboy Ep 5

Fishboy Ep 6

3 Comments »

Posted: January 19th, 2010 | Author: chmullig | Filed under: Nerdery | Tags: python, trac | No Comments »

At work we use the Trac wiki/ticket/software dev management system. I have ended up as its primary maintainer here. We’ve had a few developers leave, and Trac had lots of tickets orphaned with the ex-employees as owner or polluting the CC list. I whipped together a python script using the XML-RPC plugin from trac-hacks. It leaves a nice comment (polluting the timeline some) but doesn’t send out emails by default.

import xmlrpclib, re

#connect

t = xmlrpclib.ServerProxy('https://user:password@tracserver.example/xmlrpc')

#regular expression for the CC field.

byere = re.compile('(?:jamie|dave|brad)(?:@DOMAIN1\.LOCAL|@DOMAIN1\.COM)?,? ?', re.IGNORECASE)

#This first loop sets the owner to blank, the status to 'new', and cleans the CC list for any open tickets assigned to Jamie or Dave. Doing the CC fix here makes the timeline a little cleaner.

for ticketid in t.ticket.query('owner=jamie@DOMAIN1.LOCAL&owner=dave@DOMAIN1.LOCAL&status=new&status=accepted&status=assigned&testing&status=planning'):

ticket = t.ticket.get(ticketid)

cclist = ticket[3]['cc']

newcclist = byere.sub('', cclist)

t.ticket.update(ticketid, '', {'owner' : '', 'status' : 'new', 'cc' : newcclist})

#This loop is just for those tickets where they appear on the CC list.

for ticketid in t.ticket.query('cc~=jamie&cc~=dave&cc~=brad&status!=closed'):

ticket = t.ticket.get(ticketid)

cclist = ticket[3]['cc']

newcclist = byere.sub('', cclist)

t.ticket.update(ticketid, '', {'cc' : newcclist}) |

Posted: December 15th, 2009 | Author: chmullig | Filed under: Nerdery | Tags: ascii, python, unicode | 1 Comment »

At work we maintain a SDA Archive for analyzing our panels and data. It’s nice, but unfortunately doesn’t support unicode, particularly in the dataset. We translate unicode characters in the metadata to XML character reference entities, which works well for displaying that (v3.4 supports UTF-8 for metadata). This is done simply by using the built in u’Some Unicode String’.encode(‘ascii’, ‘xmlcharrefreplace) as described here.

The data is a flat ASCII text file, and it has massive issues with multibyte unicode characters in the text. The resulting field shifts meant I needed to get the best ASCII equivalent of a unicode string I could. There are a few semi-acceptable solutions, but none really preserved as much data was I wanted. I ended up making an improved version of effbot’s unaccent.py. I was primarily concerned with German, Danish, Norwegian, Finnish and Swedish, but hopefully it’s generally the most complete around.

The usage is pretty simple, just import unaccent.py and call unaccent.asciify(thing). You’ll get back an ascii str, regardless of what you stick in. In rare cases it may raise an exception if it’s a non-unicode object that can’t be asciified, but it works for my needs.

# -*- coding: utf-8 -*-

# use a dynamically populated translation dictionary to remove accents

# from a string

import unicodedata, sys

class unaccented_map(dict):

# Translation dictionary. Translation entries are added to this dictionary as needed.

CHAR_REPLACEMENT = {

0xc6: u"AE", # Æ LATIN CAPITAL LETTER AE

0xd0: u"D", # Ð LATIN CAPITAL LETTER ETH

0xd8: u"OE", # Ø LATIN CAPITAL LETTER O WITH STROKE

0xde: u"Th", # Þ LATIN CAPITAL LETTER THORN

0xc4: u'Ae', # Ä LATIN CAPITAL LETTER A WITH DIAERESIS

0xd6: u'Oe', # Ö LATIN CAPITAL LETTER O WITH DIAERESIS

0xdc: u'Ue', # Ü LATIN CAPITAL LETTER U WITH DIAERESIS

0xc0: u"A", # À LATIN CAPITAL LETTER A WITH GRAVE

0xc1: u"A", # Á LATIN CAPITAL LETTER A WITH ACUTE

0xc3: u"A", # Ã LATIN CAPITAL LETTER A WITH TILDE

0xc7: u"C", # Ç LATIN CAPITAL LETTER C WITH CEDILLA

0xc8: u"E", # È LATIN CAPITAL LETTER E WITH GRAVE

0xc9: u"E", # É LATIN CAPITAL LETTER E WITH ACUTE

0xca: u"E", # Ê LATIN CAPITAL LETTER E WITH CIRCUMFLEX

0xcc: u"I", # Ì LATIN CAPITAL LETTER I WITH GRAVE

0xcd: u"I", # Í LATIN CAPITAL LETTER I WITH ACUTE

0xd2: u"O", # Ò LATIN CAPITAL LETTER O WITH GRAVE

0xd3: u"O", # Ó LATIN CAPITAL LETTER O WITH ACUTE

0xd5: u"O", # Õ LATIN CAPITAL LETTER O WITH TILDE

0xd9: u"U", # Ù LATIN CAPITAL LETTER U WITH GRAVE

0xda: u"U", # Ú LATIN CAPITAL LETTER U WITH ACUTE

0xdf: u"ss", # ß LATIN SMALL LETTER SHARP S

0xe6: u"ae", # æ LATIN SMALL LETTER AE

0xf0: u"d", # ð LATIN SMALL LETTER ETH

0xf8: u"oe", # ø LATIN SMALL LETTER O WITH STROKE

0xfe: u"th", # þ LATIN SMALL LETTER THORN,

0xe4: u'ae', # ä LATIN SMALL LETTER A WITH DIAERESIS

0xf6: u'oe', # ö LATIN SMALL LETTER O WITH DIAERESIS

0xfc: u'ue', # ü LATIN SMALL LETTER U WITH DIAERESIS

0xe0: u"a", # à LATIN SMALL LETTER A WITH GRAVE

0xe1: u"a", # á LATIN SMALL LETTER A WITH ACUTE

0xe3: u"a", # ã LATIN SMALL LETTER A WITH TILDE

0xe7: u"c", # ç LATIN SMALL LETTER C WITH CEDILLA

0xe8: u"e", # è LATIN SMALL LETTER E WITH GRAVE

0xe9: u"e", # é LATIN SMALL LETTER E WITH ACUTE

0xea: u"e", # ê LATIN SMALL LETTER E WITH CIRCUMFLEX

0xec: u"i", # ì LATIN SMALL LETTER I WITH GRAVE

0xed: u"i", # í LATIN SMALL LETTER I WITH ACUTE

0xf2: u"o", # ò LATIN SMALL LETTER O WITH GRAVE

0xf3: u"o", # ó LATIN SMALL LETTER O WITH ACUTE

0xf5: u"o", # õ LATIN SMALL LETTER O WITH TILDE

0xf9: u"u", # ù LATIN SMALL LETTER U WITH GRAVE

0xfa: u"u", # ú LATIN SMALL LETTER U WITH ACUTE

0x2018: u"'", # ‘ LEFT SINGLE QUOTATION MARK

0x2019: u"'", # ’ RIGHT SINGLE QUOTATION MARK

0x201c: u'"', # “ LEFT DOUBLE QUOTATION MARK

0x201d: u'"', # ” RIGHT DOUBLE QUOTATION MARK

}

# Maps a unicode character code (the key) to a replacement code

# (either a character code or a unicode string).

def mapchar(self, key):

ch = self.get(key)

if ch is not None:

return ch

try:

de = unicodedata.decomposition(unichr(key))

p1, p2 = [int(x, 16) for x in de.split(None, 1)]

if p2 == 0x308:

ch = self.CHAR_REPLACEMENT.get(key)

else:

ch = int(p1)

except (IndexError, ValueError):

ch = self.CHAR_REPLACEMENT.get(key, key)

self[key] = ch

return ch

if sys.version >= "2.5":

# use __missing__ where available

__missing__ = mapchar

else:

# otherwise, use standard __getitem__ hook (this is slower,

# since it's called for each character)

__getitem__ = mapchar

map = unaccented_map()

def asciify(input):

try:

return input.encode('ascii')

except AttributeError:

return str(input).encode('ascii')

except UnicodeEncodeError:

return unicodedata.normalize('NFKD', input.translate(map)).encode('ascii', 'replace')

text = u"""

##Norwegian

"Jo, når'n da ha gått ett stôck te, så kommer'n te e å,

å i åa ä e ö."

"Vasa", sa'n.

"Å i åa ä e ö", sa ja.

"Men va i all ti ä dä ni säjer, a, o?", sa'n.

"D'ä e å, vett ja", skrek ja, för ja ble rasen, "å i åa

ä e ö, hörer han lite, d'ä e å, å i åa ä e ö."

"A, o, ö", sa'n å dämmä geck'en.

Jo, den va nôe te dum den.

(taken from the short story "Dumt fôlk" in Gustaf Fröding's

"Räggler å paschaser på våra mål tå en bonne" (1895).

##Danish

Nu bliver Mølleren sikkert sur, og dog, han er stadig den største på verdensplan.

Userneeds A/S er en dansk virksomhed, der udfører statistiske undersøgelser på internettet. Den blev etableret i 2001 som et anpartsselskab af David Jensen og Henrik Vincentz.

Frem til 2004 var det primære fokus på at forbedre hjemmesiderne for andre virksomheder. Herefter blev fokus omlagt, så man også beskæftigede sig med statistiske målinger. Ledelsen vurderede, at dette marked ville vokse betragteligt i de kommende år, hvilket man ønskede at udnytte.

Siden omlægningen er der blevet fokuseret på at etablere meget store forbrugerpaneler. Således udgjorde det danske panel i 2005 65.000 personer og omfatter per 2008 100.000 personer.

I 2007 blev Userneeds ApS konverteret til aktieselskabet Userneeds A/S

Efterhånden er aktiviteterne blevet udvidet til de nordiske lande (med undtagelse af Island) og besidder i 2009 et forbrugerpanel med i alt mere end 250.000 personer bosat i de fire store nordiske lande.

Selskabet tegnes udadtil af en direktion på tre personer, der foruden Henrik Vincentz tæller Palle Viby Morgen og Simon Andersen.

De primære konkurrenter er andre analysebureauer som AC Nielsen, Analysedanmark, Gallup, Norstat, Synnovate og Zapera.

##Finnish

Titus Aurelius Fulvus Boionius Arrius Antoninus eli Antoninus Pius (19. syyskuuta 86 – 7. maaliskuuta 161) oli Rooman keisari vuosina 138–161. Antoninus sai lisänimensä Pius (suom. velvollisuudentuntoinen) noustuaan valtaan vuonna 138. Hän kuului Nerva–Antoninusten hallitsijasukuun ja oli suosittu ja kunnioitettu keisari, joka tunnettiin lempeydestään ja oikeamielisyydestään. Hänen valtakauttaan on usein sanottu Rooman valtakunnan kultakaudeksi, jolloin talous kukoisti, poliittinen tilanne oli vakaa ja armeija vahva. Hän hallitsi pitempään kuin yksikään Rooman keisari Augustuksen jälkeen, ja hänen kautensa tunnetaan erityisen rauhallisena, joskaan ei sodattomana. Antoninus adoptoi Marcus Aureliuksen ja Lucius Veruksen vallanperijöikseen. Hän kuoli vuonna 161.

#German

So heißt ein altes Märchen: "Der Ehre Dornenpfad", und es handelt von einem Schützen mit Namen Bryde, der wohl zu großen Ehren und Würden kam, aber nicht ohne lange und vielfältige Widerwärtigkeiten und Fährnisse des Lebens durchzumachen. Manch einer von uns hat es gewiß als Kind gehört oder es vielleicht später gelesen und dabei an seinen eigenen stillen Dornenweg und die vielen Widerwärtigkeiten gedacht. Märchen und Wirklichkeit liegen einander so nahe, aber das Märchen hat seine harmonische Lösung hier auf Erden, während die Wirklichkeit sie meist aus dem Erdenleben hinaus in Zeit und Ewigkeit verlegt.

12\xbd inch

"""

if __name__ == "__main__":

for i, line in enumerate(text.splitlines()):

line = line.strip()

print line

if line and not line.startswith('#'):

print '\tTrans: ', asciify(line).strip() |

Posted: December 4th, 2009 | Author: chmullig | Filed under: Nerdery | Tags: python, sysadmin | No Comments »

I often create little cron jobs that create backups of various things on linux systems. Typically there’s a baks dir that has a tarball for each day with some clever name like ‘bak-2009-12-04.tar.gz’. This works well, but it can be a little difficult to clean the directory up without moving to a much more complicated system. I wrote a little python script to help me sort out the ones I wanted to save and the ones I wanted to trash. It took me a while to find it, so I’m posting here for posterity.

The basic idea is it loops through all the files in the current directory and moves ones older than a certain date that don’t meet certain criteria to a trash folder. I have it configured to save every day for the last month, every week for the last 6 months, and the beginning of every month forever. You can then delete the trash manually, but it makes the script fairly non-destructive.

#!/usr/bin/env python

import os, time

def categorize(zeta):

for file, date in zeta:

if date.tm_mday == 1: #save the first bak for each month, no matter what

tosave.append(file)

elif time.localtime(time.time() - 15778463) > date: #if the file is over 6 months old delete it

tomove.append(file)

elif time.localtime(time.time() - 15778463) < date and date.tm_wday == 0: #if the file is less than 6 months old and made on a sunday than delete it

tosave.append(file)

elif time.localtime(time.time() - 2592000) < date: #if the file is less than a month old, save it

tosave.append(file)

else:

tomove.append(file)

def makeEasier(files):

zeta = []

for file in files:

if "tar" in file:

if file.startswith("trac"):

date = file[5:13]

elif file.startswith("svn"):

date = file[4:12]

else:

continue

date = time.strptime(date, "%Y%m%d")

zeta.append([file,date])

return zeta

def moveEm():

for file in tomove:

if file not in tosave:

try:

os.rename(path+'/'+file,path+"/trash/"+file)

except Exception, detail:

print "Error with", file, ":", detail

else:

print "Moved", file, "to trash"

if __name__ == "__main__":

path = os.getcwd()

tomove = []

tosave = []

files = os.listdir(path)

zeta = makeEasier(files)

categorize(zeta)

moveEm() |

Posted: October 17th, 2009 | Author: chmullig | Filed under: Nerdery | 1 Comment »

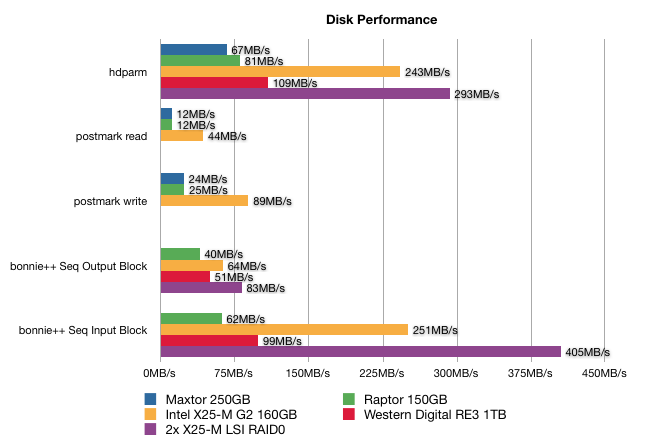

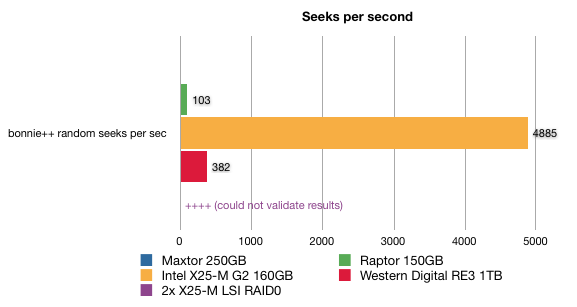

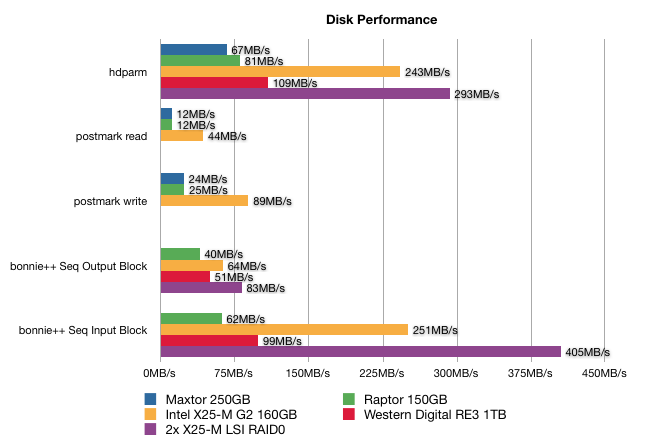

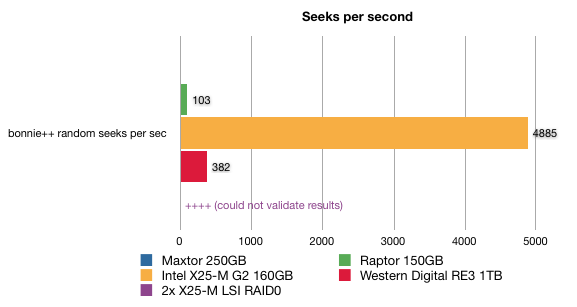

I’ve since updated some of this with a controller based RAID0 of the intel drives, as well as a RAID1 of Western Digital RE3 1TB drives.

At work we’re upgrading a linux server for a specific project. We’re replacing it’s pair of 150GB Western Digital Raptors in RAID1 with 2x 1TB in RAID1 and 2 Intel X-25M G2 Solid State Disks in RAID0. This software has always had issues with slow random seeks. We tried using 32GB flash drives for a similar problem, but even top of the line CF disks just weren’t fast enough.

The drives arrived today, and they look amazing. I did three fairly simple performance tests. The server has 2 Intel X5355 Xeon CPUs, for 8 cores at 2.66GHz and 32GB of RAM. /dev/sda is a 250GB Maxtor DiamondMax 10. /dev/sdb is the pair of 150GB VelociRaptors in a RAID1. /dev/sdc is the Intel X25M. I haven’t installed the second Intel yet. I also haven’t tweaked the settings at all, so I’m not positive NCQ and AHCI are enabled for the best performance. It’s also an older kernel, 2.6.20 on an older ubuntu. I’m using ext3, while I understand ext4 will provide some performance increases.

I ran three different benchmarks on the drives. First was a basic hdparm -tT to compare raw disk reads. The SSD was 3 times faster than the Raptor. Second was the postmark utility, which is supposed to be representative of a mail server workload. I’m slightly skeptical, but it appears the SSD was 3-4 times faster. Finally I ran bonnie++, with 63GB files. I only did it on the Raptor and SSD due to time, but the SSD was 2.2-4x faster for transfer speeds. The crazy part is bonnie++ says the SSD could do 47 times more seeks per second than the raptor. I fully admit I’m not familiar with bonnie or postmark, so if anyone has suggestions on other settings to use, please let me know.

Below are the raw results and commands I used to generate the info, in case anyone is curious.

hdparm results

chris@gore:~$ sudo hdparm -tT /dev/sda /dev/sdb /dev/sdc

/dev/sda: #Maxtor 250GB

Timing cached reads: 6020 MB in 2.00 seconds = 3011.94 MB/sec

Timing buffered disk reads: 202 MB in 3.00 seconds = 67.30 MB/sec

/dev/sdb: #Raptor RAID1

Timing cached reads: 6172 MB in 2.00 seconds = 3087.55 MB/sec

Timing buffered disk reads: 244 MB in 3.02 seconds = 80.88 MB/sec

/dev/sdc: #Intel X25M

Timing cached reads: 6164 MB in 2.00 seconds = 3083.54 MB/sec

Timing buffered disk reads: 730 MB in 3.00 seconds = 243.09 MB/sec

Postmark

chris@gore:~$ cat postmark.conf

set read 4096

set write 4096

set transactions 10000

set size 500 500000

set number 5000

#sda crappy SATA

chris@gore:/mnt/alt/$ postmark ~/postmark.conf

Time: 118 seconds total

97 seconds of transactions (103 per second)

Files: 9950 created (84 per second)

Creation alone: 5000 files (833 per second)

Mixed with transactions: 4950 files (51 per second)

5026 read (51 per second)

4974 appended (51 per second)

9950 deleted (84 per second)

Deletion alone: 4900 files (326 per second)

Mixed with transactions: 5050 files (52 per second)

Data: 1396.61 megabytes read (11.84 megabytes per second)

2861.03 megabytes written (24.25 megabytes per second)

#sdb raptor RAID1

chris@gore:~$ postmark ~/postmark.conf

Time: 116 seconds total

102 seconds of transactions (98 per second)

Files: 9950 created (85 per second)

Creation alone: 5000 files (1000 per second)

Mixed with transactions: 4950 files (48 per second)

5026 read (49 per second)

4974 appended (48 per second)

9950 deleted (85 per second)

Deletion alone: 4900 files (544 per second)

Mixed with transactions: 5050 files (49 per second)

Data: 1396.61 megabytes read (12.04 megabytes per second)

2861.03 megabytes written (24.66 megabytes per second)

#sdc SSD

chris@gore:/mnt/ssda/huge$ postmark ~/postmark.conf

Time: 32 seconds total

28 seconds of transactions (357 per second)

Files: 9950 created (310 per second)

Creation alone: 5000 files (1666 per second)

Mixed with transactions: 4950 files (176 per second)

5026 read (179 per second)

4974 appended (177 per second)

9950 deleted (310 per second)

Deletion alone: 4900 files (4900 per second)

Mixed with transactions: 5050 files (180 per second)

Data: 1396.61 megabytes read (43.64 megabytes per second)

2861.03 megabytes written (89.41 megabytes per second)

#bonnie++ -b

Raptor RAID1,63G,40986,55,36001,10,26842,4,60763,71,63846,5,102.9,0,16,79,0,+++++,+++,79,0,80,0,+++++,+++,79,0

Single X25-M,63G,65092,86,79769,22,60024,10,86899,98,256937,21,4884.9,10,16,2069,5,+++++,+++,2147,4,2362,5,+++++,+++,2193,4

Western Digital 1TB RE3 RAID1,63G,51726,64,49560,8,46897,8,48455,71,101728,9,381.6,0,16,+++++,+++,+++++,+++,+++++,+++,+++++,+++,+++++,+++,+++++,+++

2x X25-M RAID0,63G,84487,99,179178,26,96480,16,58096,85,414760,38,+++++,+++,16,+++++,+++,+++++,+++,+++++,+++,+++++,+++,+++++,+++,+++++,+++

1 Comment »

Posted: May 2nd, 2006 | Author: chmullig | Filed under: personal | No Comments »

I’m not in California anymore!

I’m not in California anymore!

The short version is that I’m moving back to DC/MD. Right now I’m in a holiday inn in Evanston, Wyoming (at least I’m not in Utah anymore!).

For those interested, check out my MPG (since I first bought the car). Spikes and dips generally due to poor accounting.

No Comments »

Posted: April 1st, 2006 | Author: chmullig | Filed under: Nerdery | No Comments »

Jamie, Dave and I managed to get our stupid little idea onto /. today. We were first on the page from about 1pm to 2pm eastern, slowly sliding down throughout the day. A big thanks goes to Dan/mcgreen for submitting the story that finally did it. We were also spotted on digg (twice thrice), reddit, waxy (his conclusion? We were one of two that made him laugh) and others.

So I figured I would take a moment to share about how this all came to be. Back in December I was talking with the programmers at the office. Making fun of various web frameworks and other suitably dorky things. Apparently a few minutes before December 7, 2005 12:38:56 PST I made a joke similar to “Well, if ruby is better on rails why not any language? Like SQL.” and we all proceeded to laugh. Then I went back to my desk and paid a whole $8 to register the domain. Hey, it’s pretty funny, right? We all thought it would be great fun to set it up for an April Fool’s joke or something.

Insert here 4 months worth of nothing happening except an occasional mention. In March it may have escalated to “Hey, we should do that soon.”

Then finally on the 29th Jamie decides to kick into gear. We formulate a plan. I redirect the DNS to Jamie’s dedicated servers and we become a mirror of the Ruby on Rails site. Jamie does some quick changes, including the logo and mast, and a few other little things. Everyone works (the for pay kind). We agree that the next evening we’ll all get together at Jamie’s place and, after celebrating Dave’s birthday, produce a screencast and finish the site.

The 30th was crazy at work. We leave and finally get started sometime close to 10. I’m on my MacBook Pro, Dave is on his iBook and Jamie on his G5. Macs are in these days. Jamie is working on the site, primarily doing the photoshop work. Dave and I are working together on the screencast – he’s doing the pages, I’m doing the backend side like sore_init, sor_import and setting up textmate. I turn on the built in apache and give dave SSH + admin, so he can log in over SSH from his computer and make the magic happen. Our approach is to record the video while narrating for practice. Then we will record a voice over separately.

At 2:21 AM and about eight attempts later this proved successful. We had a 6.4GB uncompressed .mov (and 16MB compressed one) with only one big mistake – garage band crashed in the middle. Luckily nothing was happening on screen so we decide to fix it in post. Dave proceeds to take a nap while I play with the video. The MBP’s hard drive isn’t quite fast enough to play the massive uncompressed video, so I transfer it to Jamie’s G5. Our approach here is simple – the video will play on the screen and dave and I will provide commentary to be recorded in Logic. It goes well and we do pretty good on the first (and only) take. With a little editing magic jamie makes us sound practically good. Audio is then (4:45AM by now) exported to a wav file and copied to my MBP. We then sleep.

Next day, things are crazy at work for me but very quiet for the programmers. The other nerds in the company love it. Jamie and Dave make some improvements to the site though out the day. I formulate a plan to get a usable version of the screencast (remember audio and video still aren’t in one file, and there are still two mistakes). We can hopefully make a perfect version on my iBook, but if there are issues I want to at least get something. There’s an apple store one block from the office. I’m quite handy with final cut pro. The files are very small. Add these together and you get some shacking (thanks to Mike for shopping + hacking = shacking).

The three of us traipse in there with a USB drive containing all the files we need and go up to a dual G5 towards the end. I quickly copy the files into the home directory, set up a new profile in FCP and import the files. We then have to render the video, a 10 minute process. Jamie stays at the computer surfing the web, while Dave and I go over and look at the iPods. After rendering, I come back to sync up the audio and video (difficult with only a very quiet left channel), loop some video on the front and end, and chop out the garage band crash (it happens after we jump to line 54321, just before we do the sor_page snippet). I then quickly export and walk away for the 3 minutes that takes. We copy the files to the USB drive, plug it into another computer to test, and leave.

I work on work stuff while Jamie and Dave intersperse a little more website tweaking, including the remaining example projects. Jamie has about 2 hours more work on the site to do, Dave needs to create the tarball for people to download, and I plan on redoing the editing on the screencast.

At home my trusty iBook (yay for PPC) launches FCP HD just fine and, running off a respectable external drive, isn’t even that slow. I redo what we did at the apple store but a little cleaner (and using the uncompressed original file, not the compressed version). Then I add the title and end slates with the background fade. Then I start to get fancy. You see the one major problem we had with the screencast was the 404 page. It says Apache on it, ruining the whole illusion. I put white matte covering the two sections where it appears, then carefully overlaid a text with a proper “SQL/Development” identifier. Declaring it complete I exported a two versions of differing quality, at about 22MB and 48MB each. These get sent to Jamie, and I relax.

After returning from a Warriors game at sometime close to midnight Jamie puts in a heroic effort getting the screencast and download pages working, as well as the little Ajaxy pop ups for the links and a few other configuration things. Dave and I are already in bed. I’ve contacted a bunch of east coasters and asked them to promote the site in the morning, and Dave is planning on getting up at 7.

I slept in on Saturday. I hadn’t gotten a good nights sleep in 5 days, so I didn’t really wake up until 10AM. My cell phone was ringing. I didn’t answer, but saw it was Dave. I got a glass of water and called him back. “Have you seen slashdot?” “Uh, no.” I answer. I pretend I’m not thinking what I’m actually thinking, “Holy crap we made the front page.” My MBP slowly comes back from “deep sleep” and I load up slashdot. There, in bright pink letters:

It turns out a guy who goes to my high school (and does many of the same activities I do, as well as a friend of my brother back in middle school) posted, and cowboy neal posted it. Amazing. At that point it had just hit after a couple of Dave’s rejected posts, with only a few comments. You can see that traffic, shall we say, spiked. Within a few hours we had over 20 THOUSAND visitors. The site was still up, although the hosting facility was a bit bogged down, so pages loads were slow.

We continued to try to pimp the page throughout the day. I sent an email to DHH, the creator of Ruby on Rails, and he responded that it was, “Very funny. Great April’s 1st joke!” Most of the comments were entirely positive, except a few generally insulting all April 1st jokes. Some of my favorites are the Ruby on Rail’s list and the /. comments that noticed a bunch of our details (especially Jamie’s hacked server response. That’s dedication.). Anyone know if Guido found it? Below is a list of references I found, including a bunch in Korean. Ok…. If you find any more shoot an email.

Referrers and other found links.

No Comments »